Building an End-to-End Blog Generation Application using Bedrock, AWS Lambda, API Gateway, S3 and Postman API Network

Part 2 of the "Generative AI on Cloud" Series

Welcome back to the "Generative AI on Cloud" series! In Part 1, I introduced Generative AI and explored its wide range of use cases, such as text summarization, personalized marketing, and creative content generation. We also laid out the structured project lifecycle that guides the successful implementation of Generative AI solutions.

Today, in Part 2, I want us to take a significant step forward. We'll dive deeper into implementation—building an end-to-end blog generation application using AWS Cloud Services. This article will guide you through developing a powerful solution leveraging Amazon Bedrock, Lambda, API Gateway, and S3 for modern AI-powered workflows. By the end, you'll have a clear roadmap to integrate Generative AI into real-world applications.

Overview of the Use Case: Blog Generation

In this project, we aim to create a blog generation system. The workflow includes:

Accepting a blog topic as input from a user via an API.

Using Amazon Bedrock’s foundation models to generate a blog on the topic.

Storing the generated blog as a text file in Amazon S3.

Returning a successful response to the user.

This simple yet highly practical solution can be scaled to accommodate other content types, such as product descriptions, personalized emails, or newsletters.

System Architecture

The architecture follows a serverless pattern for cost efficiency and scalability. Here's an outline of the components:

Amazon API Gateway: Accepts HTTP requests from users and routes them to the backend.

AWS Lambda: Handles the core application logic, including invoking Amazon Bedrock for text generation.

Amazon Bedrock: Provides access to pre-trained foundation models, such as Meta's Llama 3, for generating content.

Amazon S3: Stores the blog files for retrieval and archiving.

Postman: Tests the API calls from the user to the system and shows the responses. (Note: I am using Postman instead of a chat UI to build an appreciation of the API and how it works.)

Implementation Steps

Here is a step-by-step guide to building the application:

Step 1: Set Up AWS Bedrock

Amazon Bedrock is the backbone of this architecture, providing pre-trained Generative AI models.

Find Amazon Bedrock using the search bar at the top of the AWS Console.

Model Access Configuration:

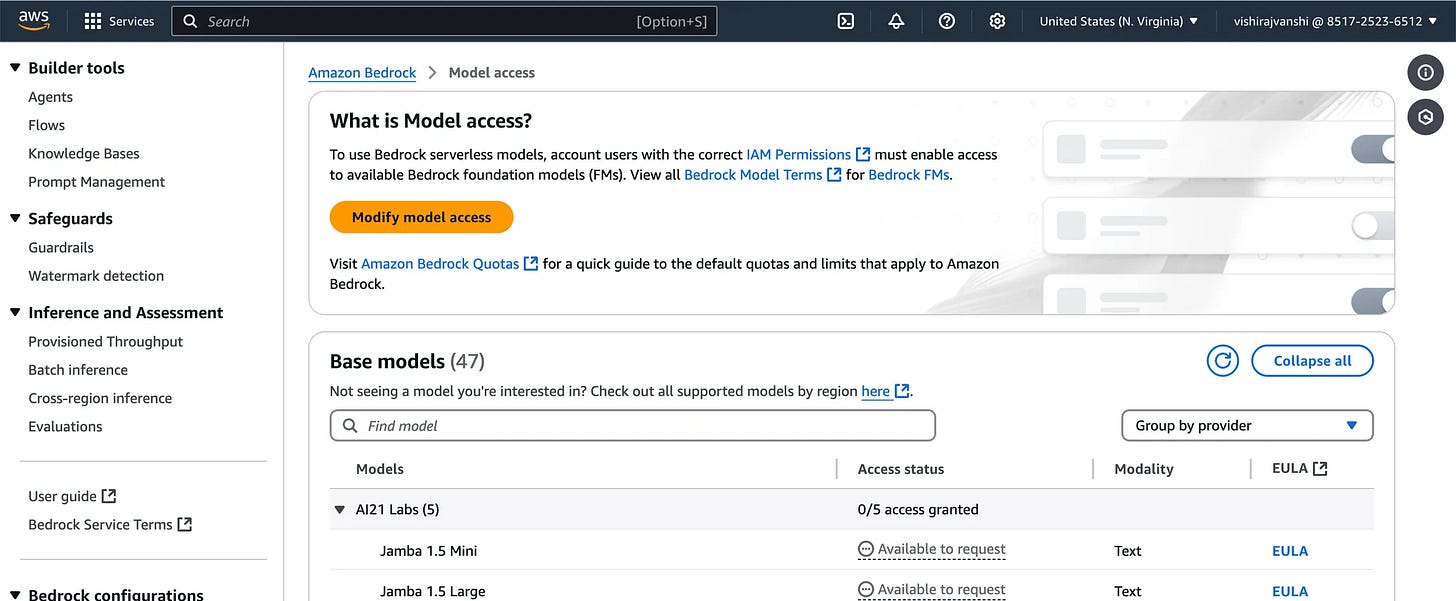

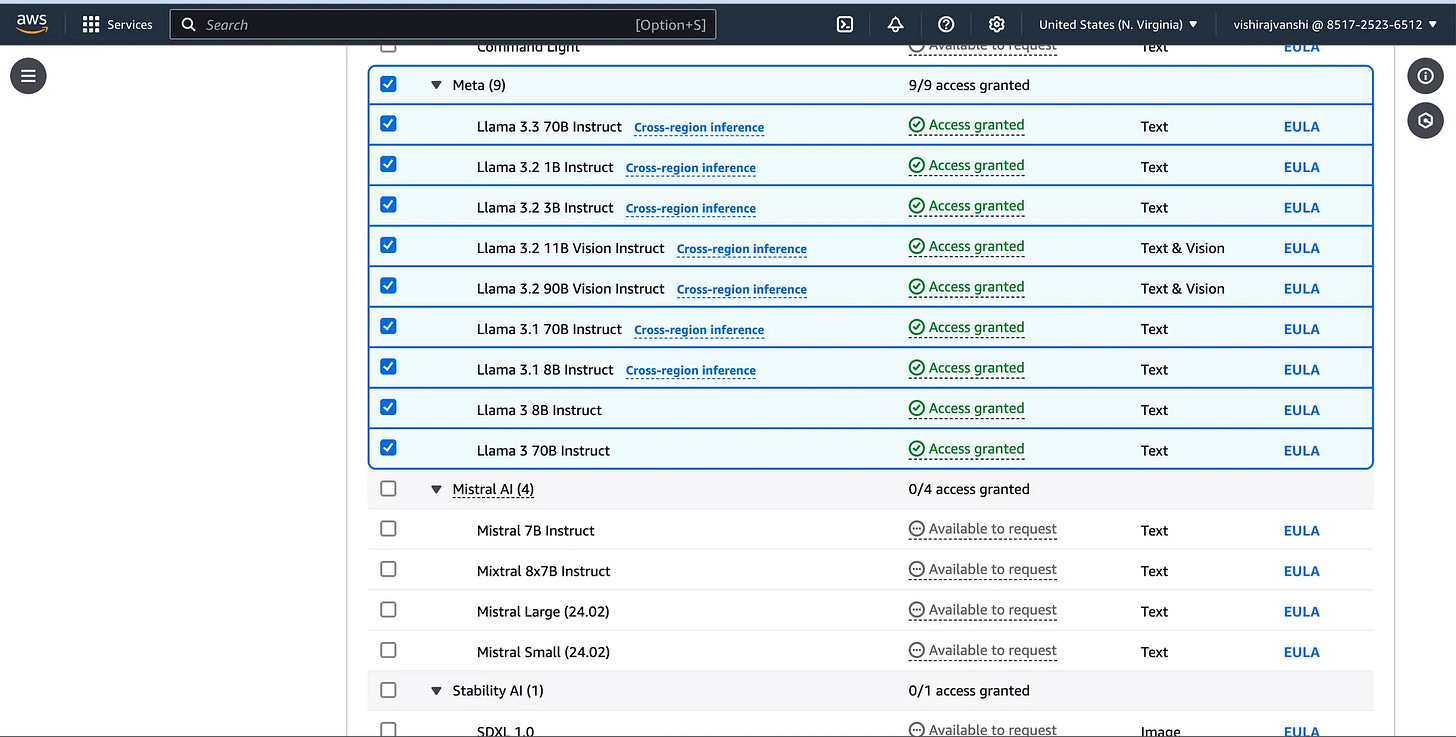

Navigate to Amazon Bedrock in the AWS Console and go to Model access.

Click Modify Model Access at the top and select the models you want to enable.

Request access to models like Meta Llama 3 70B Instruct.

Configure permissions in the "Manage Model Access" section.

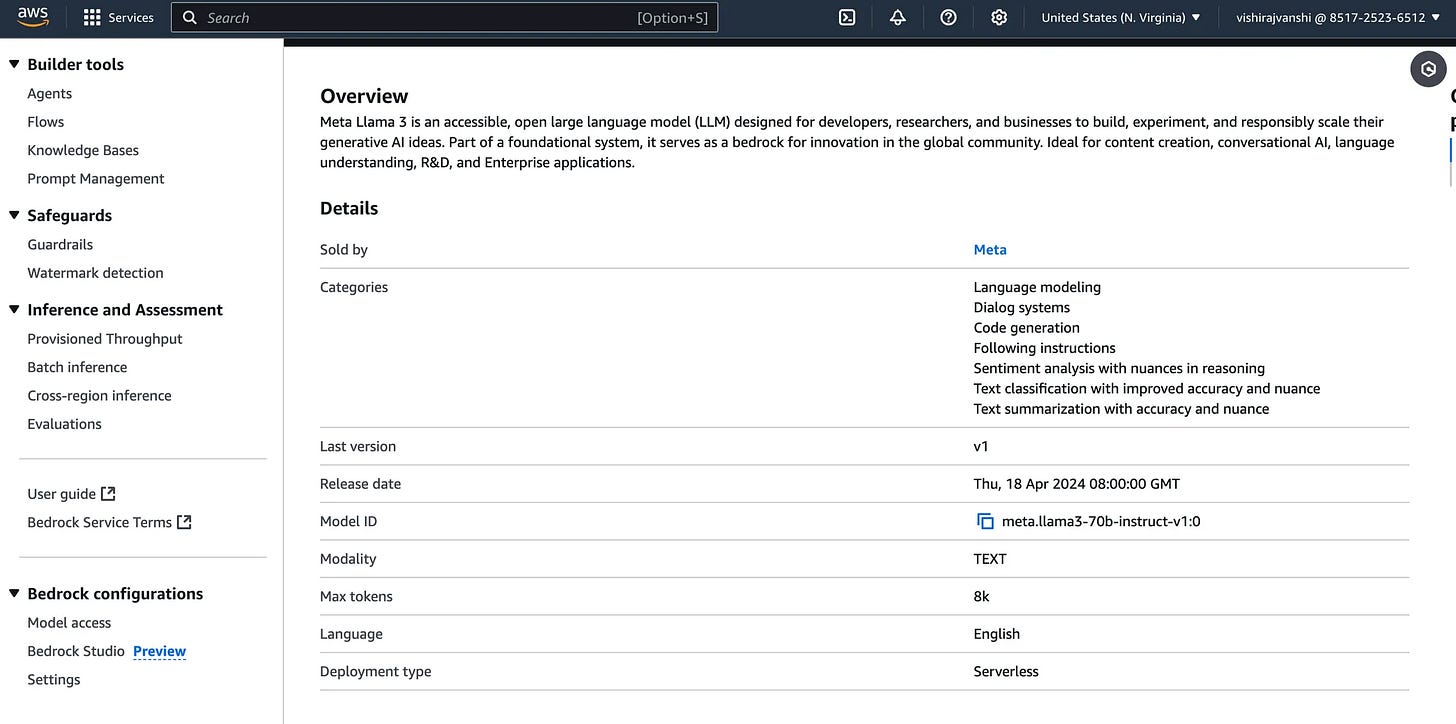

Choose the Right Model:

For this project, we will use Meta's Llama 3 70B Instruct, known for its balance of quality, cost, and performance in generating long-form text.
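Before wiring the model into Lambda, it can help to confirm the exact model ID string offered in your Region. The following is a minimal sketch, assuming a boto3 `bedrock` (control-plane) client is passed in; `find_model_ids` is a hypothetical helper name. Note that it lists the models offered in the Region and does not by itself prove that access has been granted.

```python
from typing import Any


def find_model_ids(bedrock_client: Any, keyword: str) -> list[str]:
    """Return sorted model IDs in the client's Region whose ID contains `keyword`.

    `bedrock_client` is assumed to be boto3.client("bedrock", region_name=...),
    which exposes list_foundation_models().
    """
    response = bedrock_client.list_foundation_models()
    summaries = response.get("modelSummaries", [])
    return sorted(s["modelId"] for s in summaries if keyword in s["modelId"])


# Usage (requires AWS credentials, so it is left as a comment):
#   import boto3
#   client = boto3.client("bedrock", region_name="us-east-1")
#   print(find_model_ids(client, "llama3"))
```

Passing the client in as an argument keeps the helper easy to exercise locally with a stub before any AWS credentials are configured.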

Step 2: Create the AWS Lambda Function

AWS Lambda executes business logic when the API Gateway sends a request.

Key Lambda Function Features to understand:

It accepts a blog topic as an input argument.

It calls Amazon Bedrock’s foundation model to generate blog content based on the topic.

It writes the generated blog to an S3 bucket.

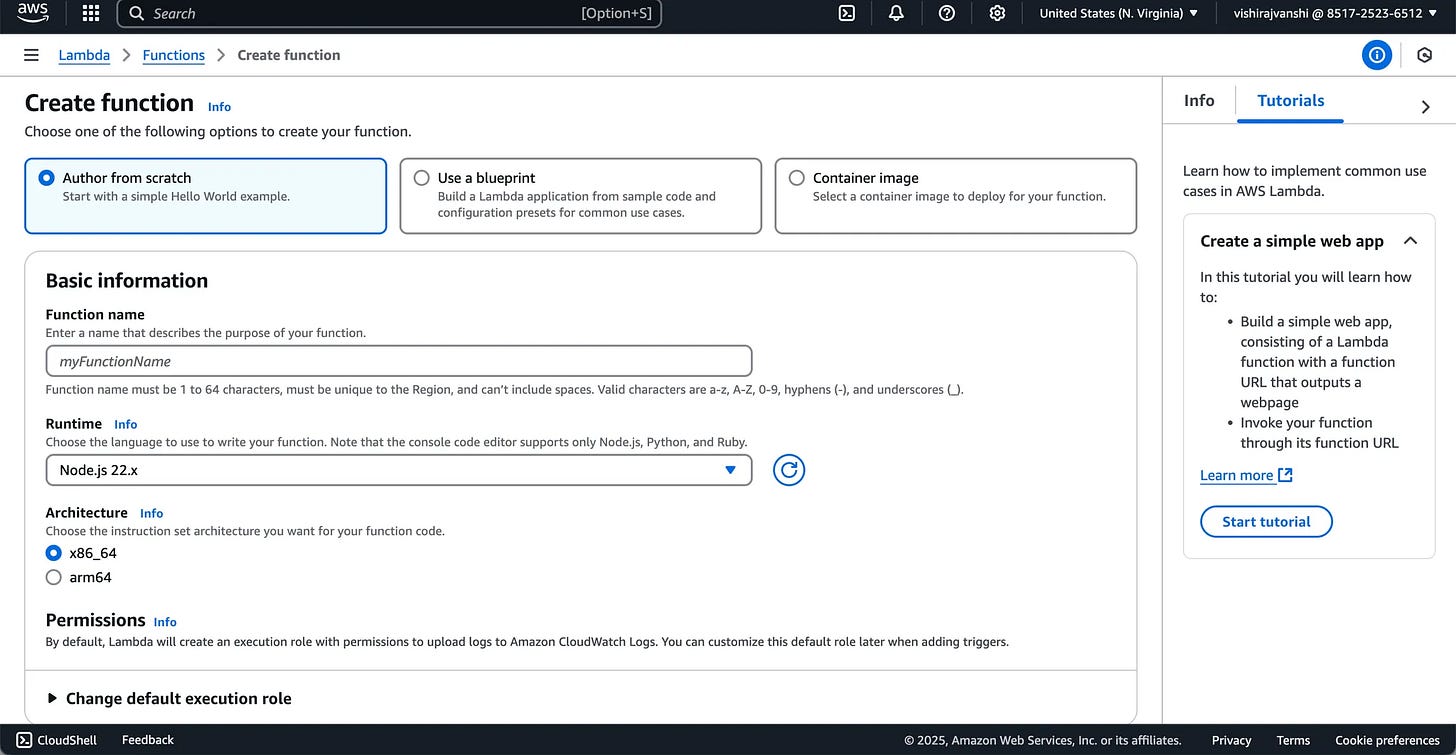

To Configure Lambda Function

Use the search bar at the top of the AWS Console to find AWS Lambda and click “Create Function”.

Enter the function name.

Under Runtime select Python 3.12

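Keep everything else as it is for now and click “Create Function”. (One setting to revisit later: the default Lambda timeout is 3 seconds, which a model call can easily exceed; it can be raised under Configuration > General configuration.)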

Once the function is created, click the function name and scroll down to the Code source section. This is where you enter the code that accepts the input arguments, invokes the foundation model, and pushes the output to the S3 bucket (which we will create in a later step).

Code Source:

i. Importing Required Libraries

At the start, the necessary modules and libraries are imported. These include boto3 for interacting with AWS services, datetime for timestamp generation, and json for handling data serialization.

Code snippet

```python
import boto3
import botocore.config
import json
from datetime import datetime
```

ii. Generating a Blog Using Amazon Bedrock

The core logic for content generation is encapsulated in the function `generate_blog_via_bedrock`. Here's how it works:

Compose the Prompt: A dynamic prompt is prepared to instruct the foundation model to create a blog based on a user-provided topic.

Set Model Parameters: Variables like temperature, maximum output length, and `top_p` are defined to influence the quality of the generated output.

Invoke the Foundation Model: The function connects to the specified Bedrock model and sends the prompt for processing.
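To make the temperature and `top_p` parameters more concrete, here is a small, generic illustration of temperature scaling and nucleus (top-p) filtering over a toy set of token scores. It is a sketch of the general sampling technique only, not Bedrock's or Llama's actual implementation; the function names and the example numbers are made up for illustration.

```python
import math


def apply_temperature(logits: list[float], temperature: float) -> list[float]:
    """Softmax over logits divided by temperature (lower = sharper distribution)."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def top_p_filter(probs: list[float], top_p: float) -> dict[int, float]:
    """Keep the smallest set of most-likely tokens whose cumulative probability
    reaches top_p, then renormalize. Returns {token_index: probability}."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return {i: probs[i] / total for i in kept}


if __name__ == "__main__":
    toy_logits = [2.0, 1.0, 0.1]  # hypothetical scores for three tokens
    print(apply_temperature(toy_logits, 0.5))
    print(apply_temperature(toy_logits, 1.5))
    print(top_p_filter([0.5, 0.3, 0.15, 0.05], 0.9))
```

In this picture, a temperature of 0.5 concentrates probability on the most likely tokens, while a `top_p` of 0.9 discards only the least likely tail, which is one way to read the values used in this project.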

Code Snippet:

```python
def generate_blog_via_bedrock(topic: str) -> str:
    # Compose the prompt
    prompt_template = f"""<s>[INST]Human: Write a 200-word blog on the topic {topic}. Assistant:[/INST]"""

    # Define model parameters
    request_body = {
        "prompt": prompt_template,
        "max_gen_len": 512,
        "temperature": 0.5,
        "top_p": 0.9
    }

    try:
        # Create a client for Bedrock runtime
        bedrock_client = boto3.client(
            "bedrock-runtime",
            region_name="us-east-1",
            config=botocore.config.Config(
                read_timeout=300,
                retries={'max_attempts': 3}
            )
        )

        # Invoke the model
        response = bedrock_client.invoke_model(
            body=json.dumps(request_body),
            modelId="meta.llama3-70b-instruct-v1:0"
        )

        # Parse the response
        response_data = json.loads(response.get('body').read())
        generated_blog = response_data['generation']
        return generated_blog

    except Exception as e:
        print(f"Error generating the blog: {e}")
        return ""
```
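The request and response bodies exchanged with the model are plain JSON, so they can be built and parsed without touching AWS. The sketch below separates those two steps into small helpers; the helper names are hypothetical, and the sample response values are invented purely to show the shape (`generation`, `prompt_token_count`, `generation_token_count`, and `stop_reason` are the fields Bedrock documents for Meta Llama models).

```python
import json

LLAMA3_MODEL_ID = "meta.llama3-70b-instruct-v1:0"  # model ID used in this article


def build_request_body(topic: str, max_gen_len: int = 512,
                       temperature: float = 0.5, top_p: float = 0.9) -> str:
    """Serialize the same request the article sends to invoke_model."""
    prompt = (f"<s>[INST]Human: Write a 200-word blog on the topic {topic}. "
              "Assistant:[/INST]")
    return json.dumps({
        "prompt": prompt,
        "max_gen_len": max_gen_len,
        "temperature": temperature,
        "top_p": top_p,
    })


def parse_generation(raw_body: bytes) -> str:
    """Extract the generated text; return "" if the field is missing."""
    data = json.loads(raw_body)
    return data.get("generation", "").strip()


# Illustrative response payload (values are made up, not real model output).
SAMPLE_RESPONSE = json.dumps({
    "generation": "  A short blog about the topic...  ",
    "prompt_token_count": 25,
    "generation_token_count": 210,
    "stop_reason": "stop",
}).encode("utf-8")

if __name__ == "__main__":
    print(build_request_body("Role of LLMs in Wealth Management"))
    print(parse_generation(SAMPLE_RESPONSE))
```

Keeping the serialization apart from the network call makes it straightforward to unit test the prompt and parameters locally.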

iii. Saving the Generated Blog to Amazon S3

This function takes in the key name, target S3 bucket, and the generated blog content, then stores it in an S3 bucket as a text file.

Code Snippet:

```python
def save_blog_to_s3(s3_key: str, s3_bucket: str, blog_content: str):
    s3_client = boto3.client('s3')
    try:
        s3_client.put_object(Bucket=s3_bucket, Key=s3_key, Body=blog_content)
        print("Blog saved successfully to S3.")
    except Exception as e:
        print(f"Error saving the blog to S3: {e}")
```

iv. Lambda Handler: The Entry Point

The `lambda_handler` function uses the above utilities to orchestrate the workflow:

Accepts the blog topic as input from an API event.

Calls the `generate_blog_via_bedrock` function to create the blog content.

Saves the generated blog to Amazon S3 with a timestamped file name.

Code Snippet:

```python
def lambda_handler(event, context):
    try:
        # Parse the blog topic from the API request
        event_body = json.loads(event['body'])
        blog_topic = event_body.get('blog_topic', 'Default Topic')

        # Generate the blog content
        generated_blog = generate_blog_via_bedrock(blog_topic)

        # If blog content is successfully generated
        if generated_blog:
            # Generate S3 key with timestamp
            timestamp = datetime.now().strftime('%H%M%S')
            s3_key = f"blog-output/{timestamp}.txt"
            s3_bucket = 'your-s3-bucket-name'

            # Save the blog to S3
            save_blog_to_s3(s3_key, s3_bucket, generated_blog)

            return {
                'statusCode': 200,
                'body': json.dumps('Blog generation successfully completed.')
            }
        else:
            print("No blog content generated.")
            return {
                'statusCode': 500,
                'body': json.dumps('Error: Failed to generate blog content.')
            }

    except Exception as e:
        print(f"Lambda error: {e}")
        return {
            'statusCode': 500,
            'body': json.dumps(f"Internal server error: {e}")
        }
```
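The handler builds its S3 key from `%H%M%S` alone, so two blogs generated at the same clock time on different days would land on the same key and the later one would overwrite the earlier one. One possible alternative, sketched here under my own assumptions (the `build_s3_key` name, the slug length, and the random suffix are not part of the original code), is to include the date, a slug of the topic, and a short random suffix.

```python
import re
import uuid
from datetime import datetime, timezone
from typing import Optional


def build_s3_key(topic: str, now: Optional[datetime] = None,
                 prefix: str = "blog-output") -> str:
    """Build a key like blog-output/<UTC timestamp>-<topic-slug>-<random>.txt."""
    now = now or datetime.now(timezone.utc)
    # Lowercase the topic and collapse anything non-alphanumeric into hyphens.
    slug = re.sub(r"[^a-z0-9]+", "-", topic.lower()).strip("-")[:40].strip("-")
    slug = slug or "untitled"
    stamp = now.strftime("%Y%m%dT%H%M%SZ")
    suffix = uuid.uuid4().hex[:8]  # guards against same-second collisions
    return f"{prefix}/{stamp}-{slug}-{suffix}.txt"


if __name__ == "__main__":
    print(build_s3_key("Role of LLMs in Wealth Management"))
```

A key that carries the topic also makes the objects easier to recognize when browsing the bucket in the console.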

Step 3: Configure Identity and Access Management (IAM) Roles

Ensure the Lambda function has proper IAM permissions:

Add permissions for `bedrock:InvokeModel` and `s3:PutObject`.

Attach the following policy to the Lambda role:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "bedrock:InvokeModel",
        "s3:PutObject"
      ],
      "Resource": "*"
    }
  ]
}
```

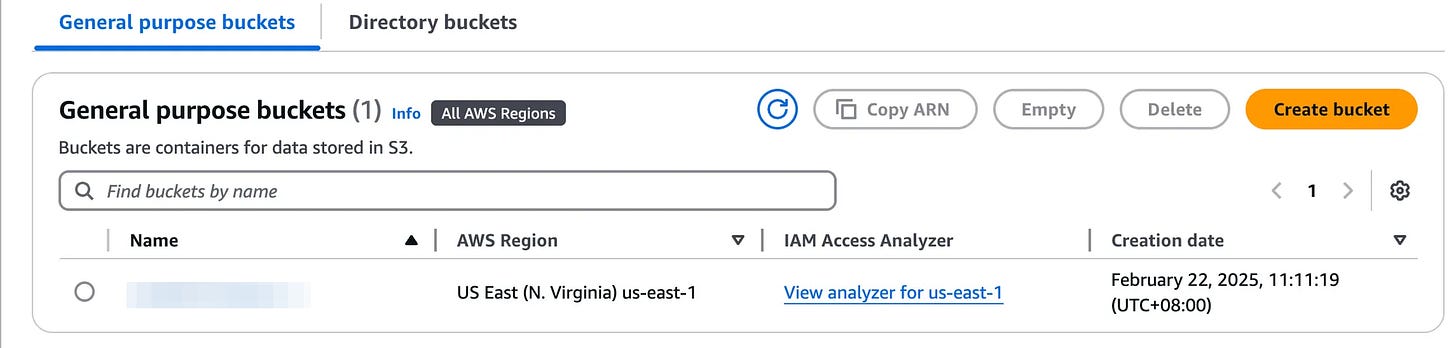

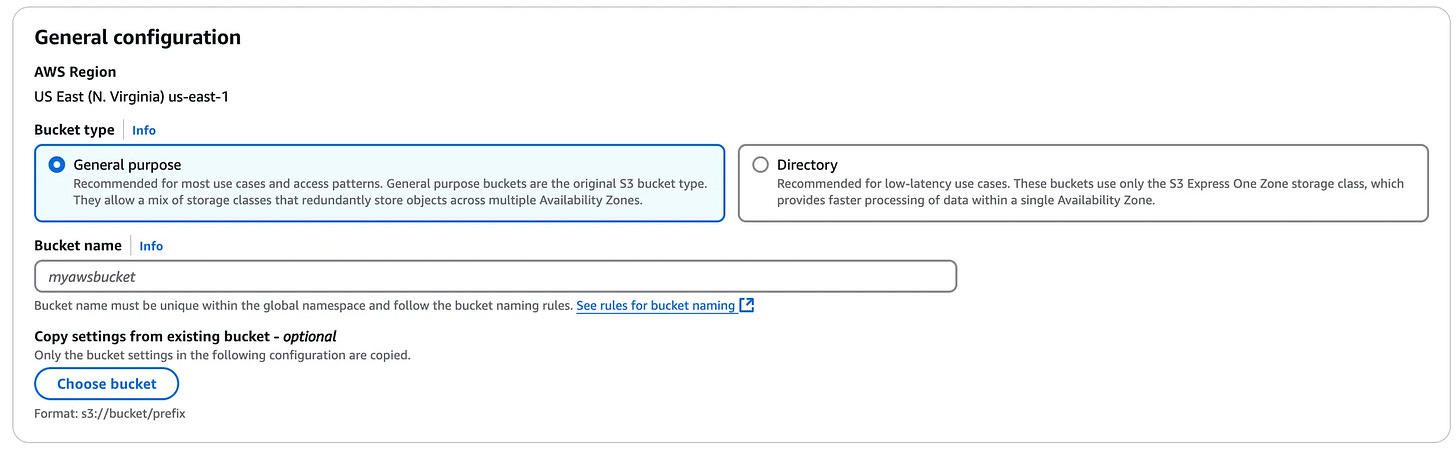

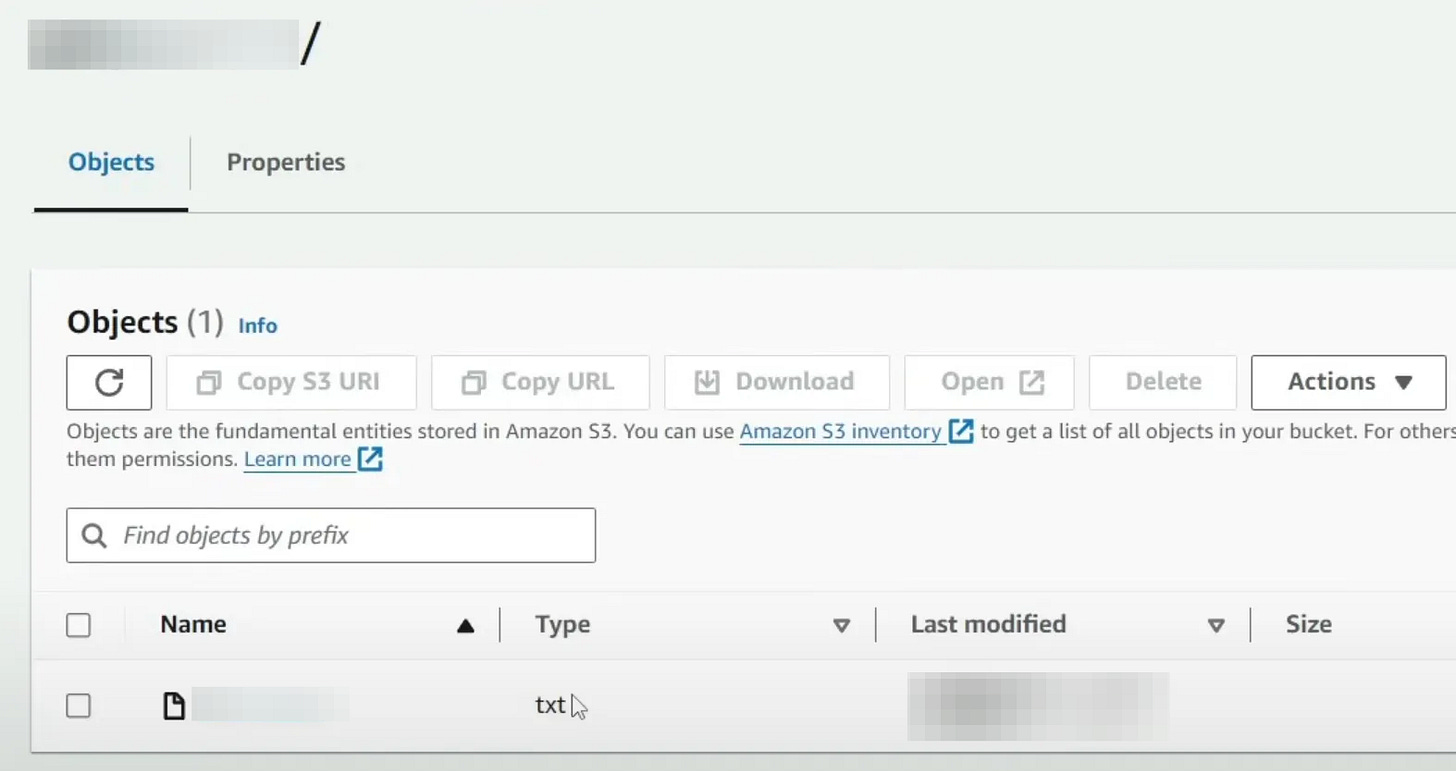
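The policy above grants both actions on every resource (`"Resource": "*"`), which is convenient for a demo. For anything longer-lived, a narrower policy is usually preferable. The sketch below generates one scoped to a single foundation model and a single bucket prefix; the function name and the `Sid` values are my own, and the bucket, Region, and prefix are whatever you chose in the other steps.

```python
import json


def build_lambda_policy(bucket: str, model_id: str, region: str,
                        prefix: str = "blog-output") -> dict:
    """Return an IAM policy document limited to one model and one S3 prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "InvokeBlogModel",
                "Effect": "Allow",
                "Action": "bedrock:InvokeModel",
                # Foundation-model ARNs have an empty account ID field.
                "Resource": f"arn:aws:bedrock:{region}::foundation-model/{model_id}",
            },
            {
                "Sid": "WriteBlogObjects",
                "Effect": "Allow",
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            },
        ],
    }


if __name__ == "__main__":
    policy = build_lambda_policy(
        bucket="your-s3-bucket-name",
        model_id="meta.llama3-70b-instruct-v1:0",
        region="us-east-1",
    )
    print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the policy editor in place of the wildcard version.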

Step 4: Create Amazon S3 Bucket

Amazon S3 stores the generated blog content.

Use the S3 Console to create a bucket

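Enter the bucket name (e.g., `aws-bedrock-demo`) and click Create Bucket.

The bucket name must match the value assigned to `s3_bucket` in the Lambda code above, since that is the bucket passed to this call:

`save_blog_to_s3(s3_key, s3_bucket, generated_blog)`

Ensure the bucket name is globally unique. S3 bucket names share one namespace across all AWS accounts and Regions, not just your own Region.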

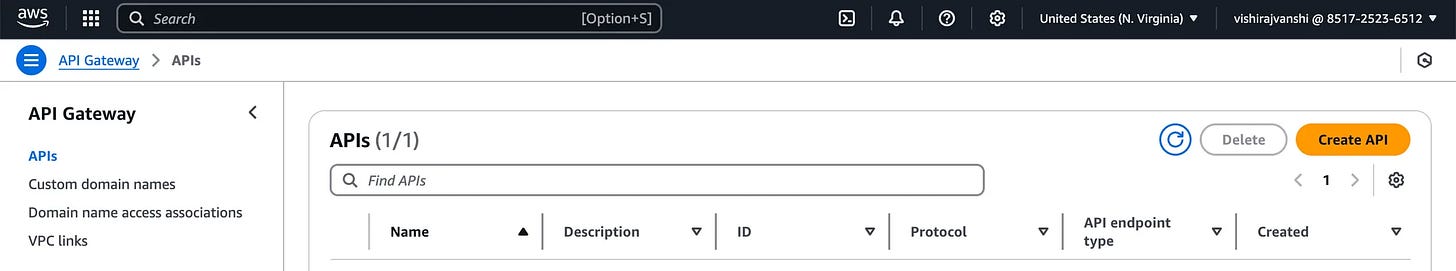
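The bucket can also be created from code instead of the console. Here is a minimal sketch, assuming a boto3 S3 client is passed in; `create_blog_bucket` is a hypothetical helper name. The one detail worth showing is that `us-east-1` must be created without a `LocationConstraint`, while other Regions require one.

```python
from typing import Any


def create_blog_bucket(s3_client: Any, bucket_name: str, region: str) -> None:
    """Create the bucket, adding a LocationConstraint outside us-east-1.

    `s3_client` is assumed to be boto3.client("s3", region_name=region).
    """
    kwargs: dict = {"Bucket": bucket_name}
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    s3_client.create_bucket(**kwargs)


# Usage (requires AWS credentials, so it is left as a comment):
#   import boto3
#   create_blog_bucket(boto3.client("s3", region_name="us-east-1"),
#                      "your-s3-bucket-name", "us-east-1")
```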

Step 5: Set Up Amazon API Gateway

API Gateway creates an endpoint for users to trigger the Lambda function.

Create an API:

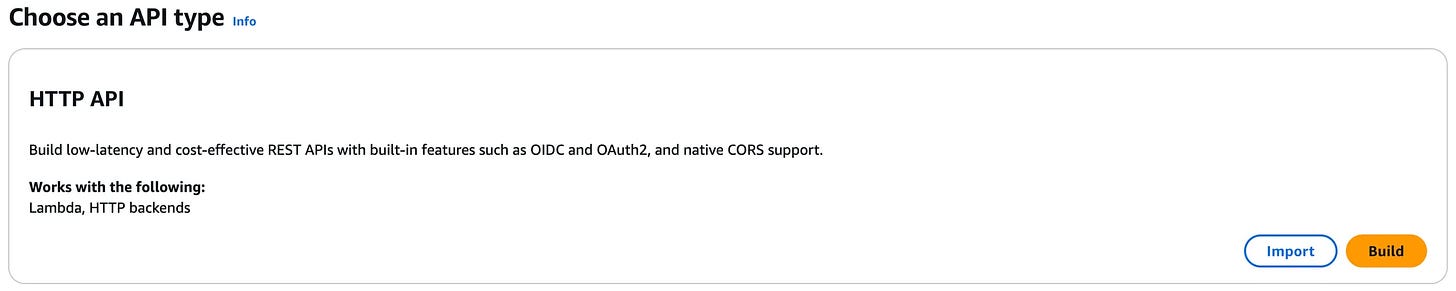

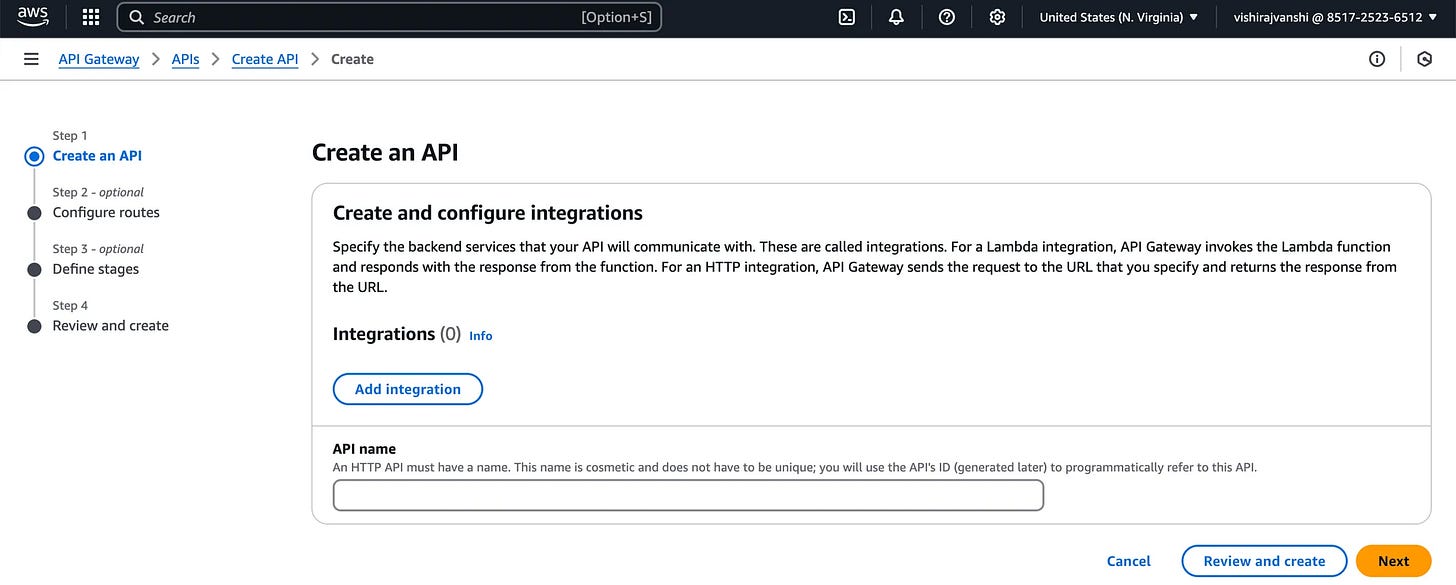

Navigate to API Gateway > Create API > HTTP API.

Click on “Build”

Enter <API name> —> Click Next —> Click Create

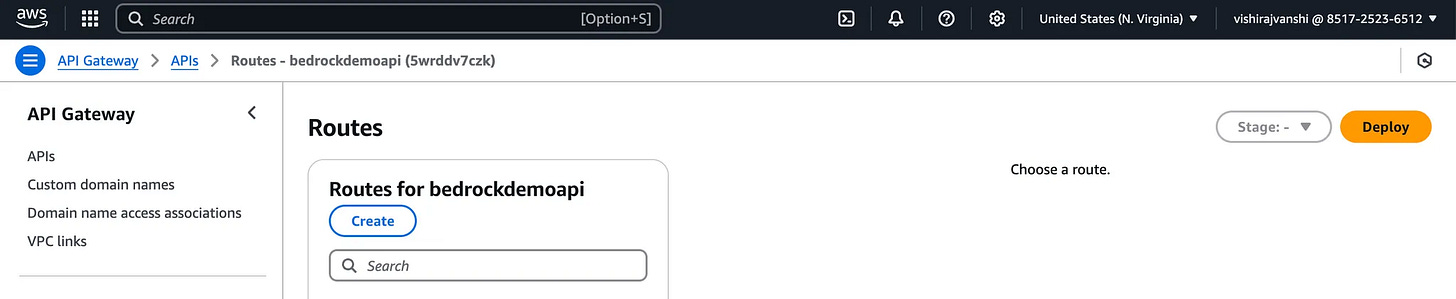

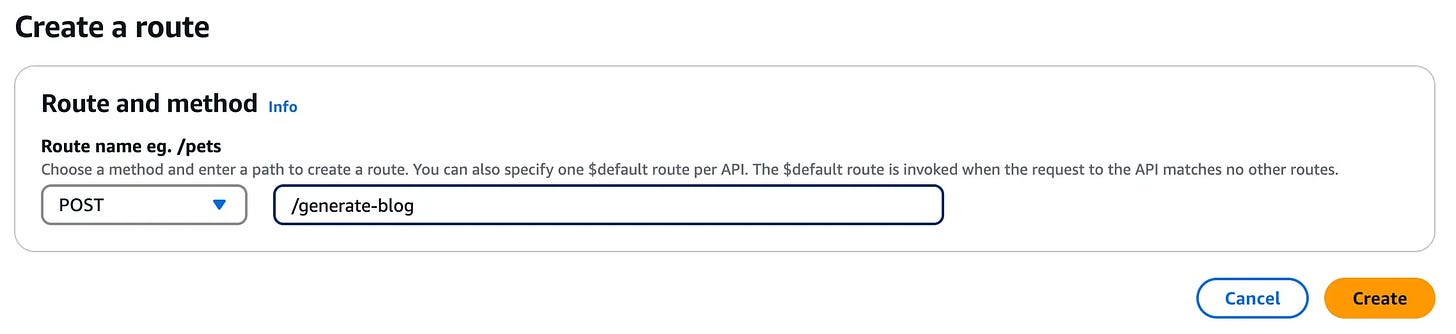

Create Routes

Click Create, select POST as the method, and define a route, e.g., `/generate-blog`. Then click Create.

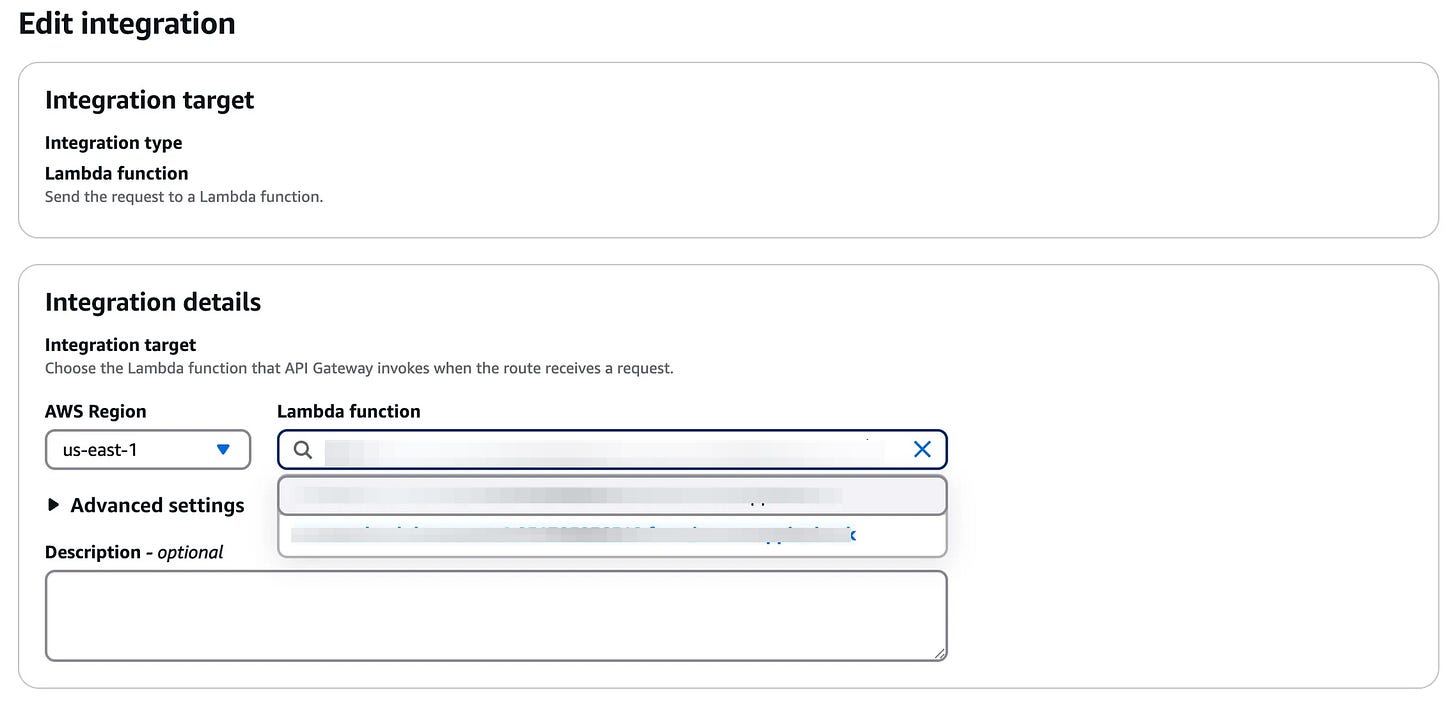

Integrate Route with the Lambda Function:

Connect the route to the Lambda function.
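Once the route is integrated, the Lambda function receives the HTTP request as an event dictionary in which the request body arrives as a string under `body` and may be base64-encoded when `isBase64Encoded` is true. The sketch below shows a more defensive way to pull the topic out of such an event; `extract_blog_topic` is a hypothetical helper, and the fallback behavior is my own assumption rather than something the original handler does.

```python
import base64
import json


def extract_blog_topic(event: dict, default: str = "Default Topic") -> str:
    """Read `blog_topic` from an API Gateway event, tolerating bad input."""
    body = event.get("body") or "{}"
    if event.get("isBase64Encoded"):
        body = base64.b64decode(body).decode("utf-8")
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return default
    if not isinstance(payload, dict):
        return default
    topic = payload.get("blog_topic")
    if isinstance(topic, str) and topic.strip():
        return topic.strip()
    return default


if __name__ == "__main__":
    sample_event = {
        "body": json.dumps({"blog_topic": "Role of LLMs in Wealth Management"}),
        "isBase64Encoded": False,
    }
    print(extract_blog_topic(sample_event))
```

Handling a missing or malformed body here lets the caller decide between using a default topic and returning a 400-style response, rather than falling into the generic exception path.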

Deploy the API

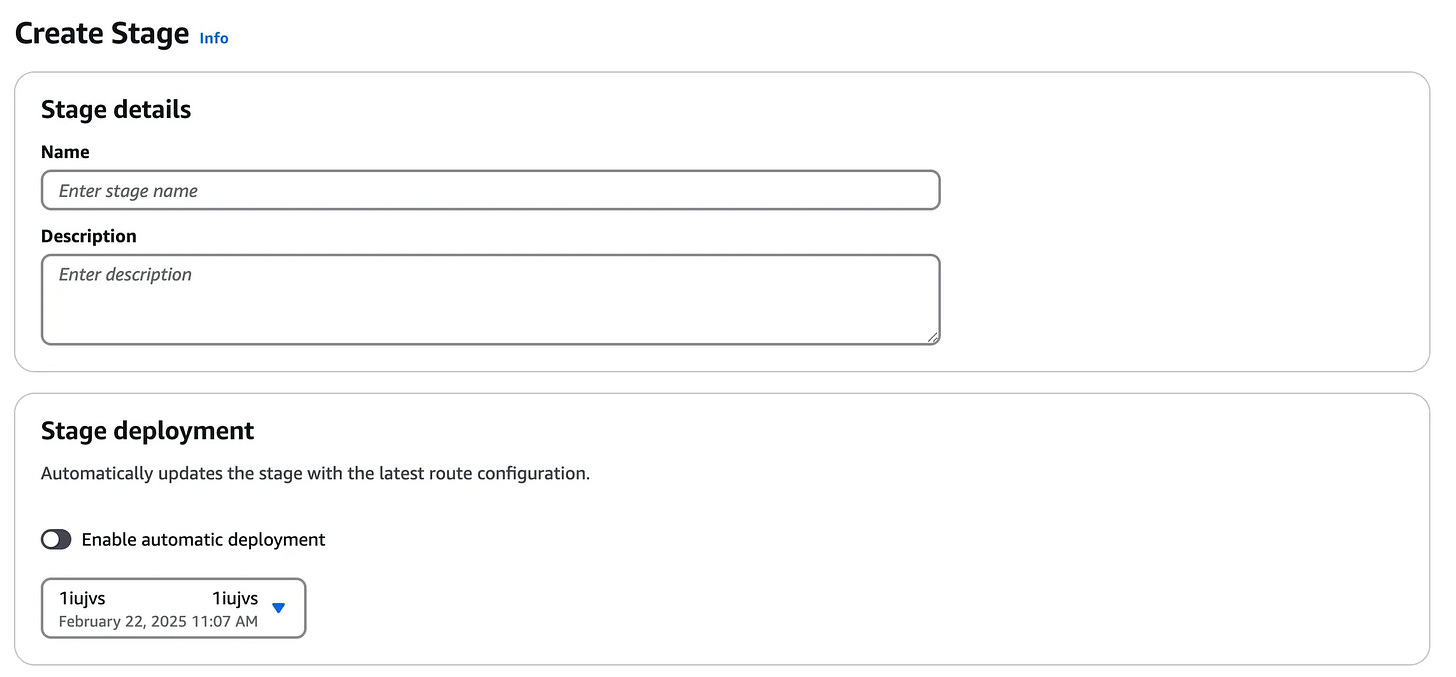

Go to Stages under Deploy section in the left bar —> Click “Create”

Put any name such as dev, staging, UAT or Production —> Click Create

This will generate an invoke URL (e.g., `https://<your-api-id>.execute-api.us-east-1.amazonaws.com/dev`).

Step 6: Use Postman to test the application

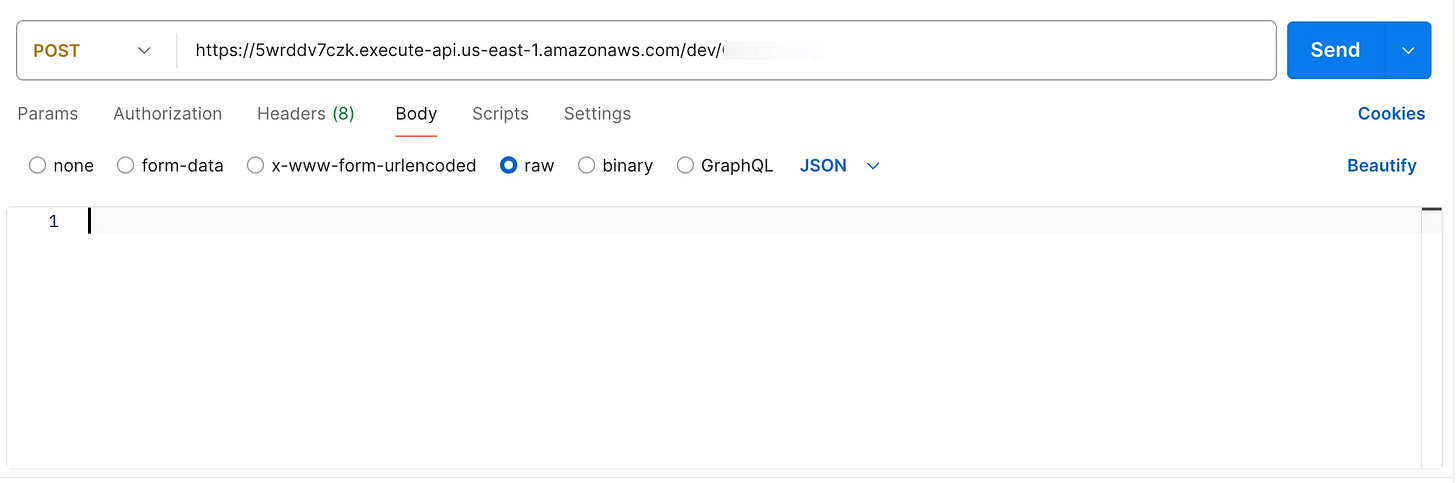

Use Postman or a similar HTTP client to test the API.

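Create a new request, set its type to POST, and enter the stage URL followed by the route (e.g., `https://<your-api-id>.execute-api.us-east-1.amazonaws.com/dev/generate-blog`).

Click “raw” under the Body tab and define the key-value pair that will be sent to the API: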

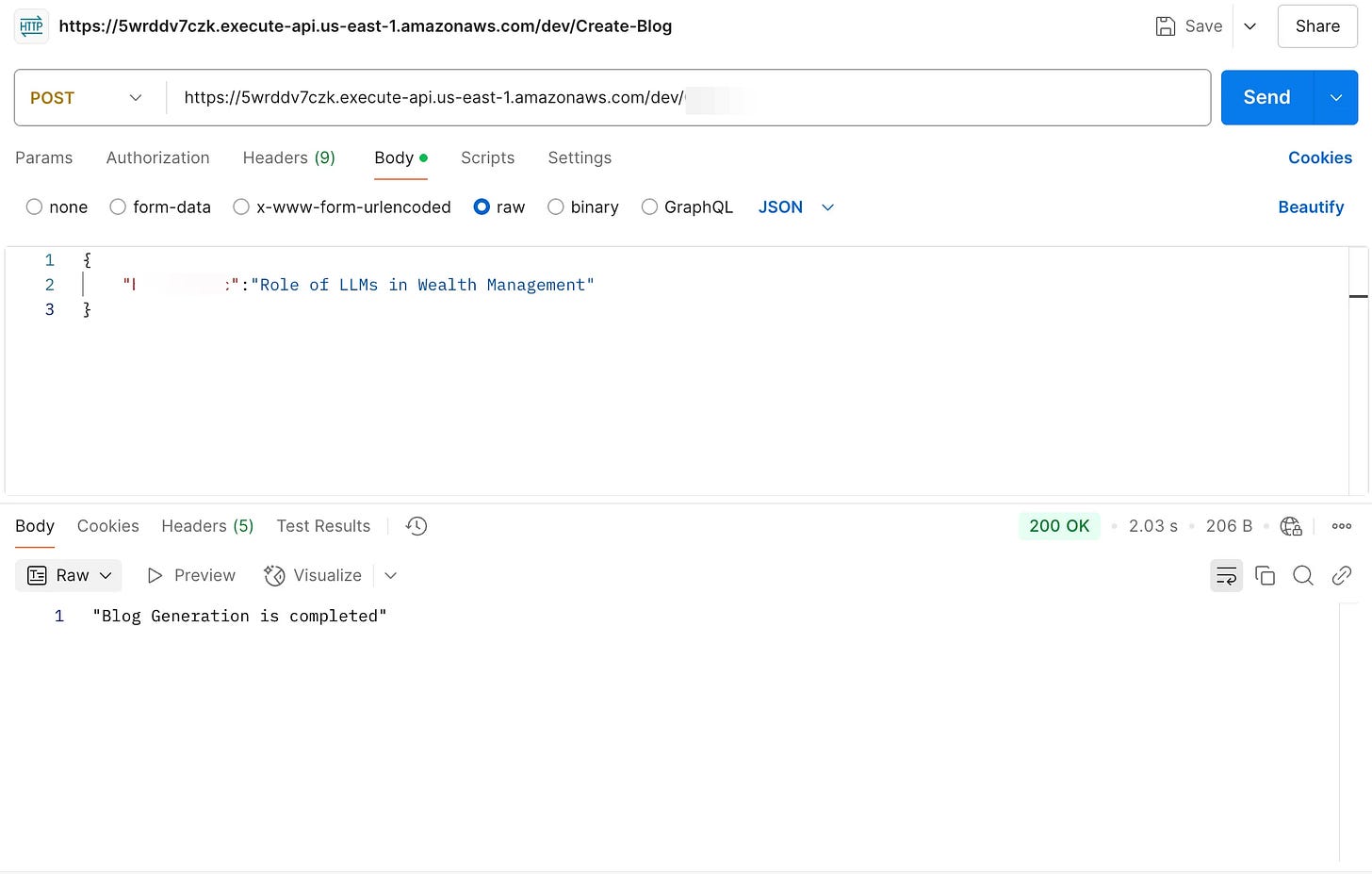

```json
{
  "blog_topic": "Role of LLMs in Wealth Management"
}
```

Click Send and look out for the confirmation

Confirm the response indicates success and verify the generated blog in the S3 bucket.
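For readers who prefer a script to Postman, the same request can be assembled with Python's standard library. This is a sketch only: the helper names are mine, the API ID is a placeholder you must replace, and the actual network call is left commented out because it needs a deployed endpoint.

```python
import json
import urllib.request


def build_invoke_url(api_id: str, region: str, stage: str,
                     route: str = "/generate-blog") -> str:
    """Compose the stage URL plus route, following the pattern shown above."""
    return f"https://{api_id}.execute-api.{region}.amazonaws.com/{stage}{route}"


def build_blog_request(url: str, topic: str) -> urllib.request.Request:
    """Build the POST request carrying the same JSON body used in Postman."""
    payload = json.dumps({"blog_topic": topic}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    url = build_invoke_url("<your-api-id>", "us-east-1", "dev")
    request = build_blog_request(url, "Role of LLMs in Wealth Management")
    print(request.get_method(), request.full_url)
    # To actually send it once the API is deployed:
    # with urllib.request.urlopen(request, timeout=60) as response:
    #     print(response.status, response.read().decode("utf-8"))
```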

Download the file and check the output. Below is what was generated.

Large Language Models (LLMs) are transforming wealth management by enhancing efficiency, personalization, and decision-making. These AI-driven tools process vast datasets to generate insights for portfolio analysis, risk assessment, and investment strategies, often matching or exceeding human expertise in certain areas. LLMs streamline repetitive tasks like client communication, reporting, and compliance updates, enabling wealth managers to focus on strategic activities. Domain-specific models, such as Bloomberg GPT, further enhance accuracy by leveraging industry-specific data. They also personalize financial advice by tailoring recommendations to individual client profiles based on risk tolerance and goals. However, challenges like inaccuracies, biases, and loss of context require oversight and integration with traditional financial models to ensure reliability and compliance. By combining LLMs with structured financial tools and cloud platforms, firms can deliver scalable, data-driven solutions while mitigating risks. This integration positions wealth managers to improve client engagement and operational efficiency in a rapidly evolving industry.

What’s coming

This hands-on implementation demonstrates how to use AWS services to build a generative AI application. The ability to seamlessly integrate services like Bedrock, Lambda, API Gateway, and S3 empowers developers to create scalable and cost-effective solutions.

Stay tuned for the next installment, where I’ll explore vector databases and retrieval-augmented generation (RAG) to build more sophisticated use cases. Generative AI on the cloud is truly the future.